How to Find a PyTorch Build That Supports Your GPU

If PyTorch shows either of the following messages, your PyTorch build was not compiled with a CUDA version compatible with your GPU.

UserWarning: GeForce RTX 3090 with CUDA capability sm_86 is not compatible with the current PyTorch installation. The current PyTorch install supports CUDA capabilities ...

RuntimeError: CUDA error: no kernel image is available for execution on the device.
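To confirm the mismatch before changing anything, you can compare the GPU's compute capability with the architectures the installed PyTorch was built for. The following is a minimal sketch, not an official check: the helper names `parse_arch` and `is_capability_covered` are made up for illustration, and the matching rule (same major version, equal or lower minor version) is a simplification that ignores PTX (`compute_XX`) entries.

```python
def parse_arch(arch):
    """Turn an architecture string such as 'sm_86' into (8, 6)."""
    digits = "".join(ch for ch in arch.split("_")[1] if ch.isdigit())
    return int(digits[:-1]), int(digits[-1])


def is_capability_covered(capability, arch_list):
    """Simplified rule (an assumption of this sketch): a binary built for
    sm_XY runs on a GPU with the same major version X and minor >= Y."""
    major, minor = capability
    for arch in arch_list:
        if not arch.startswith("sm_"):
            continue  # PTX entries (compute_XX) are ignored here
        arch_major, arch_minor = parse_arch(arch)
        if arch_major == major and arch_minor <= minor:
            return True
    return False


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        torch = None

    if torch is not None and torch.cuda.is_available():
        capability = torch.cuda.get_device_capability(0)  # e.g. (8, 6)
        arch_list = torch.cuda.get_arch_list()            # e.g. ['sm_70', ...]
        print("PyTorch", torch.__version__, "built with CUDA", torch.version.cuda)
        print("GPU capability:", capability)
        print("Built for:", arch_list)
        print("Covered:", is_capability_covered(capability, arch_list))
    else:
        print("PyTorch with a visible CUDA device is required for this check.")
```

If the last line prints `Covered: False`, the installed build most likely lacks kernels for your GPU and the steps below apply.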

To address the problem:

- Go to CUDA GPUs - Compute Capability.

- Open the section the GPU might be in, e.g.,

GeForce RTX 3090is in “CUDA-Enabled GeForce and TITAN Products”. - Find the “Compute Capability” of the GPU, e.g.,

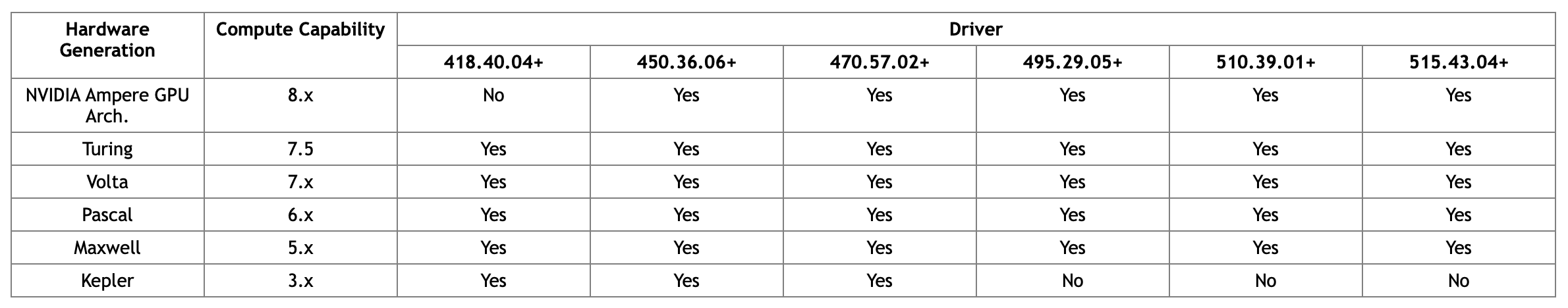

GeForce RTX 3090has the value8.6. - Go to CUDA Compatibility – FAQ -> Which GPUs are supported by the driver?, then find the driver version that supports the “Compute Capability” of the GPU, e.g., the lowest driver version supports

GeForce RTX 3090is450.36.06+.

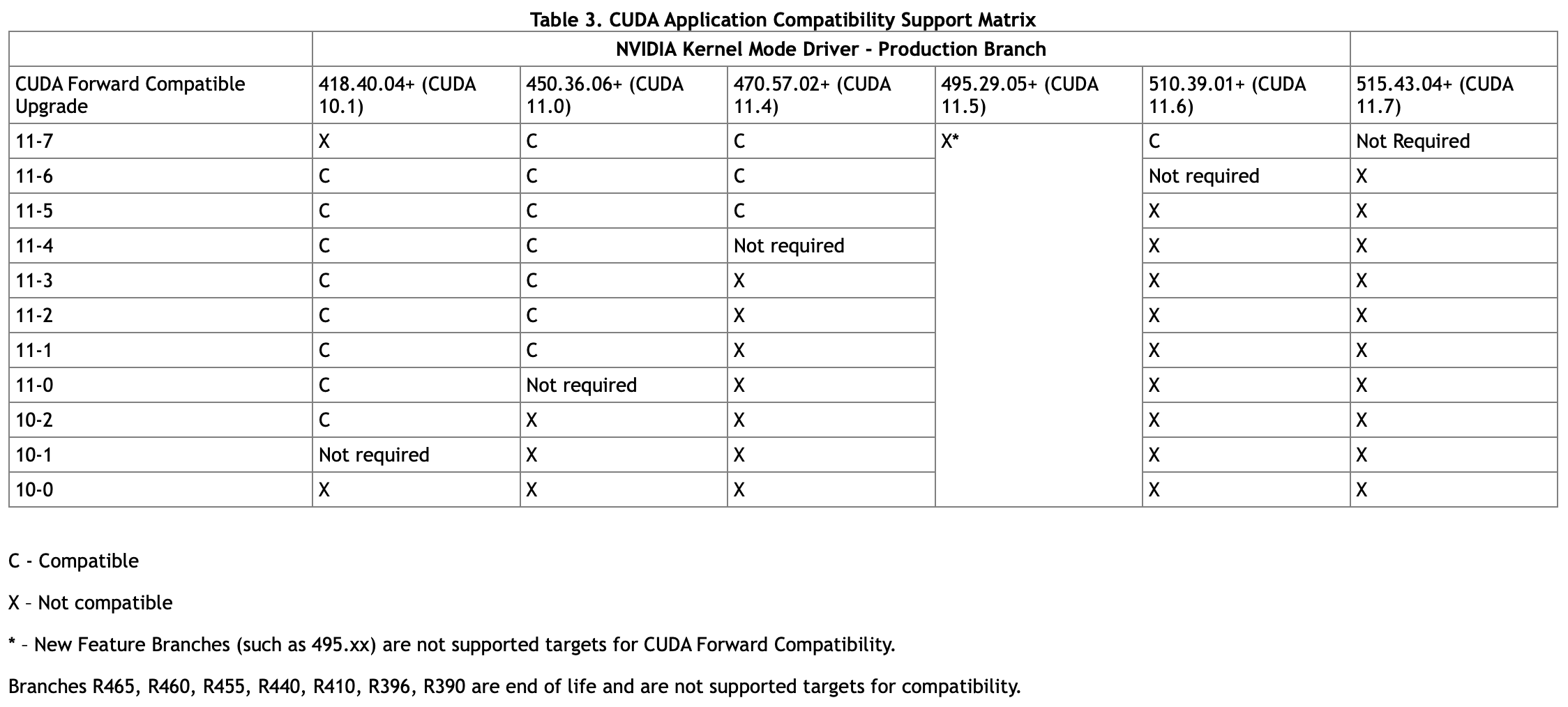

- Go to CUDA Application Compatibility Support Matrix table on the same page, and find the CUDA toolkit version that supports the corresponding driver version, e.g., the lowest CUDA toolkit version for

GeForce RTX 3090, driver450.36.06+, is11.0.

- After found out the Compute Capability and CUDA toolkit version for the GPU, go to PyTorch Stable Wheel. Every wheel with

cuXXXwhereXXXis at least as large as the needed CUDA toolkit version should be usable, e.g., the minimum PyTorch version that supportsGeForce RTX 3090should betorch-1.7.0, since it’s the first one to starts withcu110, which means CUDA toolkit11.0. - Finally, install the PyTorch with the CUDA toolkit version needed with:

pip install torch==<torch.version>+cu<XXX> -f https://download.pytorch.org/whl/torch_stable.html

For example, to install PyTorch 1.8.1 with CUDA toolkit 11.1:

pip install torch==1.8.1+cu111 -f https://download.pytorch.org/whl/torch_stable.html
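The wheel-selection rule can also be written down as a small script. This is only a sketch under the assumption that a wheel tag is `cu` followed by the major version and a single-digit minor version (so `cu102` is 10.2 and `cu110` is 11.0); the function names are hypothetical, and the list of tags passed in is an example rather than an authoritative list of published wheels.

```python
INDEX_URL = "https://download.pytorch.org/whl/torch_stable.html"


def cuda_tag(toolkit_version):
    """'11.1' -> 'cu111' (major digits followed by the minor digit)."""
    major, minor = toolkit_version.split(".")[:2]
    return "cu" + major + minor


def tag_to_version(tag):
    """'cu110' -> (11, 0); assumes the last digit is the minor version."""
    digits = tag[2:]
    return int(digits[:-1]), int(digits[-1])


def usable_tags(available_tags, minimum_toolkit):
    """Keep the tags whose CUDA toolkit version is >= the minimum needed."""
    major, minor = (int(part) for part in minimum_toolkit.split(".")[:2])
    return [tag for tag in available_tags if tag_to_version(tag) >= (major, minor)]


def pip_command(torch_version, toolkit_version):
    """Build the install command in the same form as shown above."""
    return (
        f"pip install torch=={torch_version}+{cuda_tag(toolkit_version)} "
        f"-f {INDEX_URL}"
    )


if __name__ == "__main__":
    # Example tags only; check the wheel index for what is actually published.
    tags = ["cu92", "cu101", "cu102", "cu110", "cu111"]
    print(usable_tags(tags, "11.0"))    # ['cu110', 'cu111']
    print(pip_command("1.8.1", "11.1"))
```

Comparing versions as `(major, minor)` tuples rather than as the raw number after `cu` avoids relying on the digit count of the tag.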

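Postscript

After I shared this post with a senior in my lab, I was thoroughly put in my place: it turns out the PyTorch site already lists which CUDA toolkit versions each PyTorch release supports... I'm off to Poland QQ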